Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

-

Image 1

Augusta Ada King, Countess of Lovelace (née Byron; 10 December 1815 – 27 November 1852), also known as Ada Lovelace, was an English mathematician and writer chiefly known for work on Charles Babbage's proposed mechanical general-purpose computer, the analytical engine. She was the first to recognise the machine had applications beyond pure calculation. Lovelace is often considered the first computer programmer.

Lovelace was the only legitimate child of poet Lord Byron and reformer Anne Isabella Milbanke. Lord Byron separated from his wife a month after Ada was born, and died when she was eight. Although often ill in childhood, Lovelace pursued her studies assiduously. She married William King in 1835. King was a Baron, and was created Viscount Ockham and 1st Earl of Lovelace in 1838. The name Lovelace was chosen because Ada was descended from the extinct Baron Lovelaces. The title given to her husband thus made Ada the Countess of Lovelace.

Lovelace's educational and social exploits brought her into contact with scientists such as Andrew Crosse, Charles Babbage, David Brewster, Charles Wheatstone and Michael Faraday, and the author Charles Dickens, contacts which she used to further her education. Lovelace described her approach as "poetical science" and herself as an "Analyst (& Metaphysician)". When she was eighteen, Lovelace's mathematical talents led her to a long working relationship and friendship with fellow British mathematician Charles Babbage. She was particularly interested in Babbage's work on the analytical engine. Lovelace first met him on 5 June 1833, when she and her mother attended one of Charles Babbage's Saturday night soirées with their mutual friend, and Lovelace's private tutor, Mary Somerville. (Full article...) -

Image 2

C# (/ˌsiː ˈʃɑːrp/ see SHARP) is a general-purpose high-level programming language supporting multiple paradigms. C# encompasses static typing, strong typing, lexically scoped, imperative, declarative, functional, generic, object-oriented (class-based), and component-oriented programming disciplines.

The principal designers of the C# programming language were Anders Hejlsberg, Scott Wiltamuth, and Peter Golde from Microsoft. It was first widely distributed in July 2000 and was later approved as an international standard by Ecma (ECMA-334) in 2002 and ISO/IEC (ISO/IEC 23270 and 20619) in 2003. Microsoft introduced C# along with .NET Framework and Microsoft Visual Studio, both of which are, technically speaking, closed-source. At the time, Microsoft had no open-source products. Four years later, in 2004, a free and open-source project called Mono began, providing a cross-platform compiler and runtime environment for the C# programming language. A decade later, Microsoft released Visual Studio Code (code editor), Roslyn (compiler), and the unified .NET platform (software framework), all of which support C# and are free, open-source, and cross-platform. Mono also joined Microsoft but was not merged into .NET.

As of November 2025,[update] the most recent stable version of the language is C# 14. (Full article...) -

Image 3Structured Query Language (SQL) (pronounced /ˌɛsˌkjuˈɛl/ S-Q-L; or alternatively as /ˈsiːkwəl/ ⓘ "sequel") is a domain-specific language used to manage data, especially in a relational database management system (RDBMS). It is particularly useful in handling structured data, i.e., data incorporating relations among entities and variables.

Introduced in the 1970s, SQL offered two main advantages over older read–write APIs such as ISAM or VSAM. Firstly, it introduced the concept of accessing many records with one single command. Secondly, it eliminates the need to specify how to reach a record, i.e., with or without an index.

Originally based upon relational algebra and tuple relational calculus, SQL consists of many types of statements, which may be informally classed as sublanguages, commonly: data query language (DQL), data definition language (DDL), data control language (DCL), and data manipulation language (DML). (Full article...) -

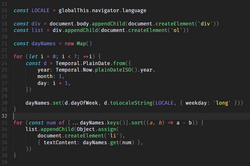

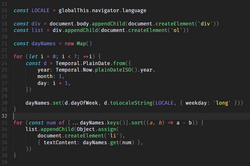

Image 4

Source code for a computer program written in the JavaScript language. It demonstrates the appendChild method. The method adds a new child node to an existing parent node. It is commonly used to dynamically modify the structure of an HTML document.

A computer program is a sequence or set of instructions in a programming language for a computer to execute. It is one component of software, which also includes documentation and other intangible components.

A computer program in its human-readable form is called source code. Source code needs another computer program to execute because computers can only execute their native machine instructions. Therefore, source code may be translated to machine instructions using a compiler written for the language. (Assembly language programs are translated using an assembler.) The resulting file is called an executable. Alternatively, source code may execute within an interpreter written for the language.

If the executable is requested for execution, then the operating system loads it into memory and starts a process. The central processing unit will soon switch to this process so it can fetch, decode, and then execute each machine instruction. (Full article...) -

Image 5

Linus Benedict Torvalds (born 28 December 1969) is a Finnish and American software engineer who is the creator and lead developer of the Linux kernel since 1991. He also created the distributed version control system Git.

Torvalds was one of the recipients of the 2012 Millennium Technology Prize "in recognition of his creation of a new open source operating system for computers leading to the widely used Linux kernel". He is also the recipient of the 2014 IEEE Computer Society Computer Pioneer Award and the 2018 IEEE Masaru Ibuka Consumer Electronics Award. (Full article...) -

Image 6Prolog is a logic programming language that has its origins in artificial intelligence, automated theorem proving, and computational linguistics.

Prolog has its roots in first-order logic, a formal logic. Unlike many other programming languages, Prolog is intended primarily as a declarative programming language: the program is a set of facts and rules, which define relations. A computation is initiated by running a query over the program.

Prolog was one of the first logic programming languages and remains the most popular such language today, with several free and commercial implementations available. The language has been used for theorem proving, expert systems, term rewriting, type systems,, automated planning,, and question answering as well as its original intended field of use, natural language processing. (Full article...) -

Image 7Object Pascal is an extension to the programming language Pascal that provides object-oriented programming (OOP) features such as classes and methods.

The language was originally developed by Apple Computer as Clascal for the Lisa Workshop development system. As Lisa gave way to Macintosh, Apple collaborated with Niklaus Wirth, the author of Pascal, to develop an officially standardized version of Clascal. This was renamed Object Pascal. Through the mid-1980s, Object Pascal was the main programming language for early versions of the MacApp application framework. The language lost its place as the main development language on the Mac in 1991 with the release of the C++-based MacApp 3.0. Official support ended in 1996.

Symantec also developed a compiler for Object Pascal for their Think Pascal product, which could compile programs much faster than Apple's own Macintosh Programmer's Workshop (MPW). Symantec then developed the Think Class Library (TCL), based on MacApp concepts, which could be called from both Object Pascal and THINK C. The Think suite largely displaced MPW as the main development platform on the Mac in the late 1980s. (Full article...) -

Image 8

Scala (/ˈskɑːlɑː/ SKAH-lah) is a strongly statically typed high-level general-purpose programming language that supports both object-oriented programming and functional programming. Designed to be concise, many of Scala's design decisions are intended to address criticisms of Java.

Scala source code can be compiled to Java bytecode and run on a Java virtual machine (JVM). Scala can also be transpiled to JavaScript to run in a browser, or compiled directly to a native executable using Clang. When running on the JVM, Scala provides language interoperability with Java so that libraries written in either language may be referenced directly in Scala or Java code. Like Java, Scala is object-oriented, and uses a syntax termed curly-brace which is similar to the language C. Since Scala 3, there is also an option to use the off-side rule (indenting) to structure blocks, and its use is advised. Martin Odersky has said that this turned out to be the most productive change introduced in Scala 3.

Unlike Java, Scala has many features of functional programming languages (like Scheme, Standard ML, and Haskell), including currying, immutability, lazy evaluation, and pattern matching. It also has an advanced type system supporting algebraic data types, covariance and contravariance, higher-order types (but not higher-rank types), anonymous types, operator overloading, optional parameters, named parameters, raw strings, and an experimental exception-only version of algebraic effects that can be seen as a more powerful version of Java's checked exceptions. (Full article...) -

Image 9

tcsh and sh shell windows on a Mac OS X Leopard desktop

A Unix shell is a shell that provides a command-line user interface for a Unix-like operating system. A Unix shell provides a command language that can be used either interactively or for writing a shell script. A user typically works within a Unix shell via a terminal emulator; however, direct access via serial hardware connections or a Secure Shell are common for server systems. Although use of a Unix shell is popular with some users, others prefer to use a graphical shell in a windowing system, such as those provided in desktop Linux distributions or macOS, instead of a command-line interface (CLI).

A user may have access to multiple Unix shells with one configured to run by default when the user logs in interactively. The default selection is typically stored in a user's profile (for example, in the local passwd file or in a distributed configuration system such as NIS or LDAP). A user may use other shells nested inside the default shell.

A Unix shell may provide many features including: variable definition and substitution, command substitution, filename wildcarding, stream piping, control flow structures (condition-testing and iteration), working directory context, and here document. (Full article...) -

Image 10

PDP-11 CPU board

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random-access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

By contrast, software is a set of written instructions that can be stored and run by hardware. Hardware derived its name from the fact it is hard or rigid with respect to changes, whereas software is soft because it is easy to change.

Hardware is typically directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system, although other systems exist with only hardware. (Full article...) -

Image 11Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals.

High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., language models and AI art); and superhuman play and analysis in strategy games (e.g., chess and Go). However, many AI applications are not perceived as AI: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore."

Various subfields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include learning, reasoning, knowledge representation, planning, natural language processing, perception, and support for robotics. To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, operations research, and economics. AI also draws upon psychology, linguistics, philosophy, neuroscience, and other fields. Some companies, such as OpenAI, Google DeepMind and Meta, aim to create artificial general intelligence (AGI) – AI that can complete virtually any cognitive task at least as well as a human. (Full article...) -

Image 12Programming languages are used for controlling the behavior of a machine (often a computer). Like natural languages, programming languages follow rules for syntax and semantics.

There are thousands of programming languages and new ones are created every year. Few languages ever become sufficiently popular that they are used by more than a few people, but professional programmers may use dozens of languages in a career.

Most programming languages are not standardized by an international (or national) standard, even widely used ones, such as Perl or Standard ML (despite the name). Notable standardized programming languages include ALGOL, C, C++, JavaScript (under the name ECMAScript), Smalltalk, Prolog, Common Lisp, Scheme (IEEE standard), ISLISP, Ada, Fortran, COBOL, SQL, and XQuery. (Full article...) -

Image 13

The Greek lowercase omega (ω) character is used to represent Null in database theory.

In the SQL database query language, null or NULL is a special marker used to indicate that a data value does not exist in the database. Introduced by the creator of the relational database model, E. F. Codd, SQL null serves to fulfill the requirement that all true relational database management systems (RDBMS) support a representation of "missing information and inapplicable information". Codd also introduced the use of the lowercase Greek omega (ω) symbol to represent null in database theory. In SQL,NULLis a reserved word used to identify this marker.

A null should not be confused with a value of 0. A null indicates a lack of a value, which is not the same as a zero value. For example, consider the question "How many books does Adam own?" The answer may be "zero" (we know that he owns none) or "null" (we do not know how many he owns). In a database table, the column reporting this answer would start with no value (marked by null), and it would not be updated with the value zero until it is ascertained that Adam owns no books.

In SQL, null is a marker, not a value. This usage differs from most programming languages, where a null value of a reference means it is not pointing to any object. (Full article...) -

Image 14Logotype used on the cover of the first edition of The C Programming Language

C is a general-purpose programming language created in the 1970s by Dennis Ritchie. By design, C gives the programmer relatively direct access to the features of the typical CPU architecture, customized for the target instruction set. It has been and continues to be used to implement operating systems (especially kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is used on computers that range from the largest supercomputers to the smallest microcontrollers and embedded systems.

A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers available for practically all modern computer architectures and operating systems. The book The C Programming Language, co-authored by the original language designer, served for many years as the de facto standard for the language. C has been standardized since 1989 by the American National Standards Institute (ANSI) and, subsequently, jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

C is an imperative procedural language, supporting structured programming, lexical variable scope, and recursion, with a static type system. It was designed to be compiled to provide low-level access to memory and language constructs that map efficiently to machine instructions, all with minimal runtime support. Despite its low-level capabilities, the language was designed to encourage cross-platform programming. A standards-compliant C program written with portability in mind can be compiled for a wide variety of computer platforms and operating systems with few changes to its source code. (Full article...) -

Image 15

The source code for a computer program in C. The gray lines are comments that explain the program to humans. When compiled and run, it will give the output "Hello, world!".

A programming language is an engineered language for expressing computer programs.

Programming languages typically allow software to be written in a human readable manner.

Execution of a program requires an implementation. There are two main approaches for implementing a programming language – compilation, where programs are compiled ahead-of-time to machine code, and interpretation, where programs are directly executed. In addition to these two extremes, some implementations use hybrid approaches such as just-in-time compilation and bytecode interpreters. (Full article...)

Selected images

-

Image 1Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

-

Image 2GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

-

Image 3A head crash on a modern hard disk drive

-

Image 4An IBM Port-A-Punch punched card

-

Image 5A lone house. An image made using Blender 3D.

-

Image 6Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

-

Image 7Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

-

Image 8Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

-

Image 9Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

-

Image 10This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

-

Image 12Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

-

Image 13Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

-

Image 17A view of the GNU nano Text editor version 6.0

-

Image 18Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

Did you know? - load more entries

- ... that both Thackeray and Longfellow bought paintings by Fanny Steers?

- ... that NATO was once targeted by a group of "gay furry hackers"?

- ... that the programming language Acorn System BASIC was so non-standard that one commenter suggested that using it on the BBC Micro would be a disaster?

- ... that the Gale–Shapley algorithm was used to assign medical students to residencies long before its publication by Gale and Shapley?

- ... that it took a particle accelerator and machine-learning algorithms to extract the charred text of PHerc. Paris. 4 without unrolling it?

- ... that Perfectly Imperfect's social media platform has a main feed that is presented in reverse chronological order and is not algorithmically curated?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

No recent news

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

-

Commons

Free media repository -

Wikibooks

Free textbooks and manuals -

Wikidata

Free knowledge base -

Wikinews

Free-content news -

Wikiquote

Collection of quotations -

Wikisource

Free-content library -

Wikiversity

Free learning tools -

Wiktionary

Dictionary and thesaurus